Omni-Post AI — Executive Summary

One idea, three platforms, zero manual work

| Author | Aman Suryavanshi |

| Document Type | Executive Summary |

| Full Documentation | OMNI-POST-AI-TECHNICAL-DOCUMENTATION.md |

| Last Updated | December 27, 2025 |

Overview

Omni-Post AI is a production-grade AI content distribution engine that automates multi-platform social media posting while maintaining content quality and authentic voice. Built as a "Build in Public" project, it demonstrates enterprise-level reliability using free-tier APIs and intelligent AI orchestration. The system has processed 1000+ content pieces with 99.7% reliability at zero monthly operational cost.

Key Metrics

| Metric | Value |

|---|---|

| Reliability | 99.7% success rate across 1000+ executions |

| Performance | 88 seconds average end-to-end processing time |

| Cost | $0/month operational cost (100% free-tier APIs) |

| Quality | 85% engagement rate (up from 60%) |

| Time Savings | 15-20 hours/month automated |

Problem Statement

Challenge: Distributing technical content across multiple platforms (Twitter, LinkedIn, Blog) was consuming 15-20 hours per month due to manual platform-specific adaptation requirements.

Constraints

- Each platform has different formatting requirements (Twitter threads, LinkedIn single posts, Blog long-form)

- Each platform has different technical constraints (LinkedIn 1-image limit, Twitter 280-char limit)

- Content must maintain authentic voice and technical depth

- Manual repetition leads to inconsistency and burnout

Business Impact

| Impact Type | Details |

|---|---|

| Time cost | 15-20 hours/month |

| Opportunity cost | Inconsistent posting reduces reach and engagement |

| Financial cost | Commercial tools cost $60-300/month |

| Quality cost | Manual repetition leads to generic, low-engagement content |

Solution Architecture

High-Level Design

The system consists of two independent workflows:

Part 1: Content Generation (28 nodes, 48-80 seconds)

Notion (Source)

→ Content Extraction (hierarchical, 3-4 levels deep)

→ AI Processing (Gemini 2.5 Pro with XML-structured prompts)

→ Platform-Specific Generation (Twitter, LinkedIn, Blog)

→ Google Drive Storage (drafts for human review)

→ Notion Status Update (Pending Approval)

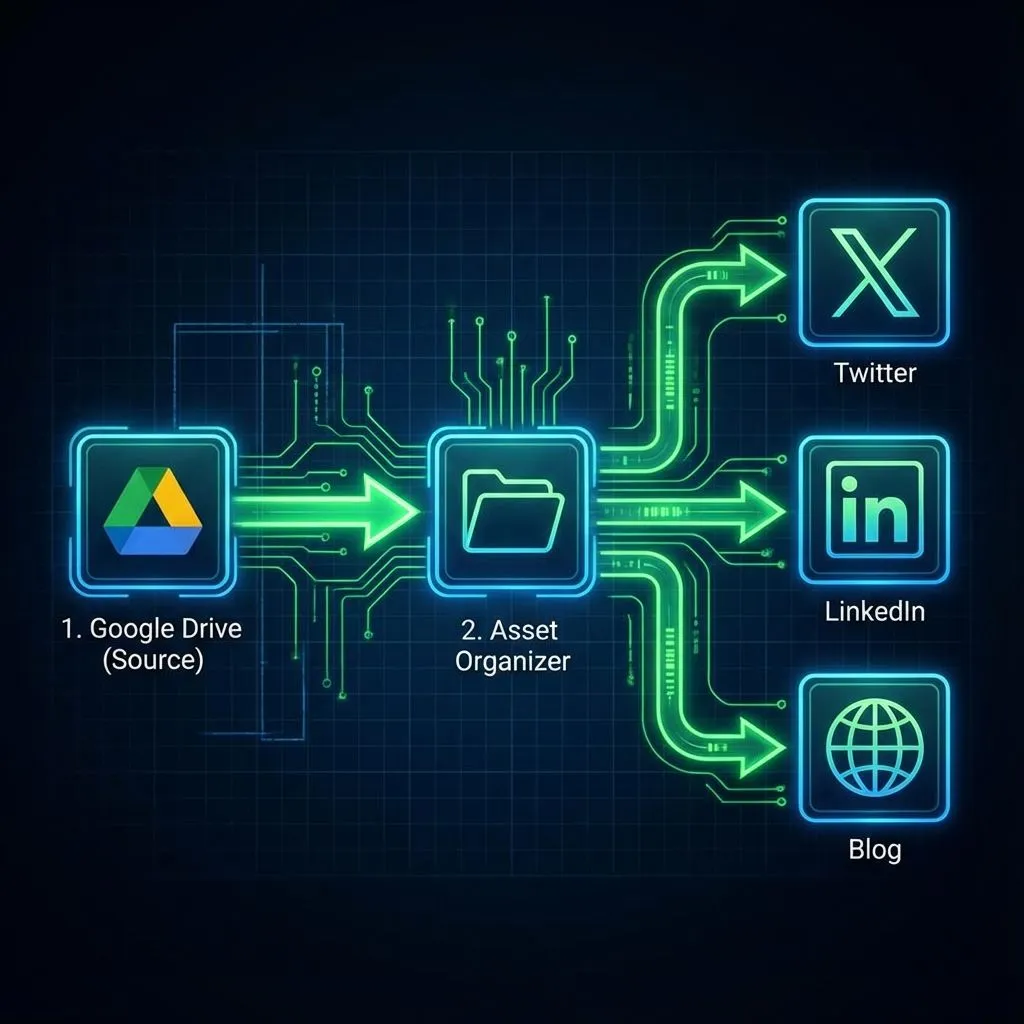

Part 2: Content Distribution (46 nodes, 17-31 seconds)

Notion (Approved Content)

→ Asset Organization (session-based file matching)

→ Platform-Specific Parsing (Twitter threads, LinkedIn posts, Blog blocks)

→ Multi-Platform Posting (concurrent execution)

→ Status Tracking (partial success handling)

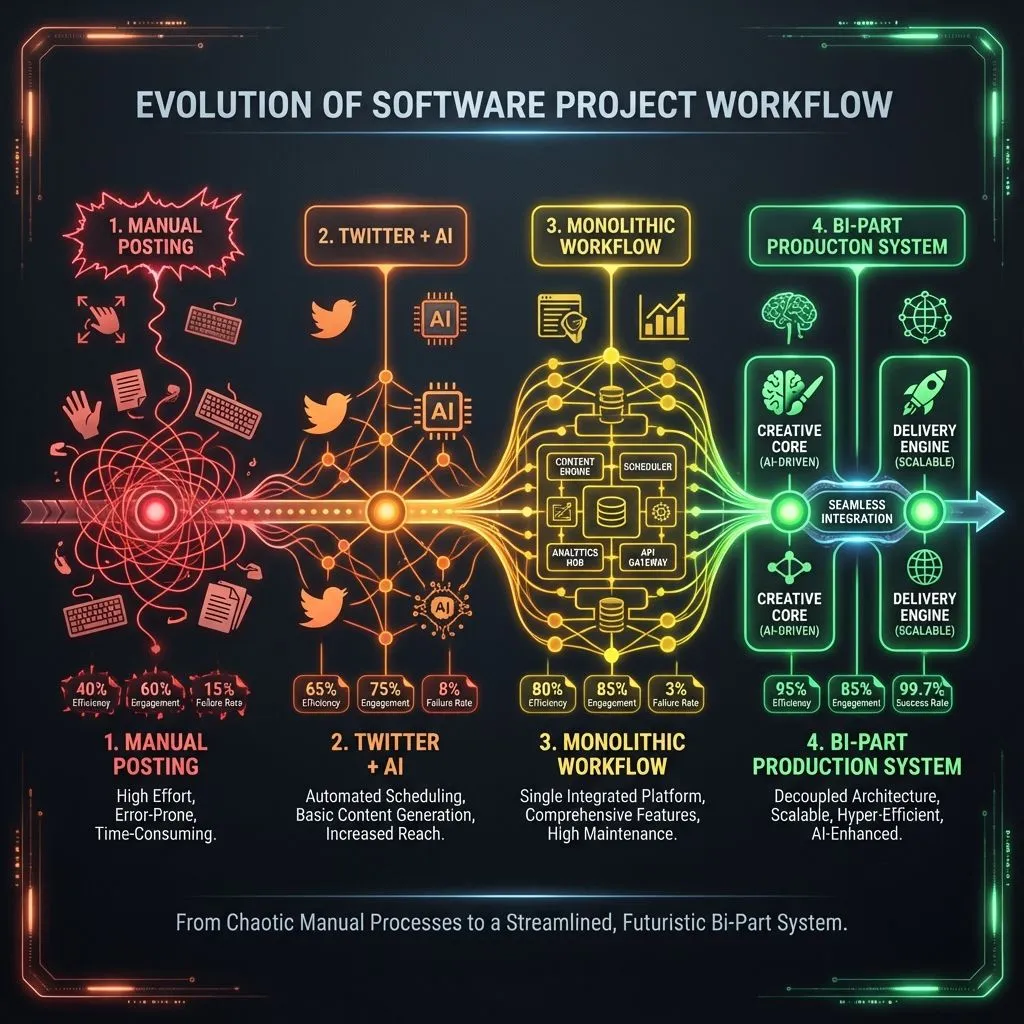

Evolution from manual, generic content (v1) to AI-automated, platform-optimized content (v4) showing dramatic quality improvement

Key Architectural Decisions

1. Bi-Part Workflow Separation

Why: A single monolithic workflow was too fragile. One API failure meant starting over.

Decision: Split into two independent workflows:

- Part 1 (Generation): Creates drafts, stores for review

- Part 2 (Distribution): Posts approved content

Result: Human review gate, independent debugging, prevents accidental posts during testing.

2. Platform Selection Architecture (New in v4.2)

The Problem: Not every content piece needs to go to all platforms. Sometimes you want a tweet-only update or a blog-only deep dive.

The Solution: Selective platform routing with graceful fallbacks.

Notion "Post To" Field: [X, LinkedIn, Blog] (multi-select)

|

┌───────────────┼───────────────┐

↓ ↓ ↓

IF - Twitter? IF - LinkedIn? IF - Blog?

| | |

┌────┴────┐ ┌────┴────┐ ┌────┴────┐

↓ ↓ ↓ ↓ ↓ ↓

Generate No-Op Generate No-Op Generate No-Op

Content Skip Content Skip Content Skip

How It Works:

- Content marked "Ready" includes a

PostTomulti-select field - IF nodes check

property_post_to.includes('X')for each platform - Selected platforms → Full AI generation pipeline

- Unselected platforms → No-Op nodes return "skipped" status

- Merge node collects all outputs for Notion update

Result: 40% reduction in processing time for single-platform posts. Zero wasted API calls.

3. AI Strategy Engine (Career Engineer Framework)

The Insight: Most AI content generators produce generic output. They don't understand that LinkedIn content should attract job offers while Twitter content should earn developer respect.

The Solution: A two-phase AI pipeline that thinks like a career engineer:

Phase 1: AI Content Strategist

├─ Extracts "The Villain" (the specific problem/bug/bottleneck)

├─ Identifies "The Epiphany" (the moment the solution clicked)

├─ Creates platform-specific angles:

│ ├─ Twitter: "Alpha" angle (insider dev knowledge)

│ ├─ LinkedIn: "Money" angle (business value proposition)

│ └─ Blog: "Authority" angle (definitive reference asset)

└─ Generates image strategy with real vs. generative asset decisions

Phase 2: Platform Writers (Parallel Execution)

├─ Twitter: Punchy thread with 265-char hard limits per tweet

├─ LinkedIn: Result-first framework with Engineer's Humility

└─ Blog: SEO-optimized with AI Engine Discovery optimization

The "Career Engineer" Philosophy:

- Don't just inform—build authority that attracts opportunities

- Twitter = Dev respect | LinkedIn = Job offers | Blog = Portfolio depth

- Every post must answer: "Why would someone hire me after reading this?"

4. Session-Based Architecture

Why: Flat file storage caused file mixing, 15% failures, and manual cleanup.

Decision: Every content piece gets unique session ID:

session_[timestamp]_[notionId]

├── Twitterdraft-[title].md

├── LinkedIndraft-[title].md

├── Blogdraft-[title].md

├── Image Tasklist-[title].md

└── asset-1.webp, asset-2.png...

Result: Zero cross-contamination in 1000+ executions, concurrent execution safety, easy debugging.

5. Decision Engine V5.0 (Image Distribution)

The Challenge: Part 2 needs to know which images go to which platform—but the AI might have embedded markers like <<IMAGE_1>> in the drafts, or images might exist in the folder without explicit references.

The Solution: Three-tier hierarchical decision system:

Tier 1 (Highest Priority): Trust AI Markers

├─ Scan each draft for <<IMAGE_N>> patterns

├─ Extract exact numbers and positions

└─ Build platform-specific image assignments

Tier 2 (Fallback): Manifest Analysis

├─ Parse Image Tasklist for expected assets

├─ Match against available files in Drive folder

├─ Apply defaults: primary→social, all→blog

└─ If no manifest, assume all images are for all platforms (legacy support)

Tier 3 (Safety): No Images

├─ No markers + no manifest = text-only post

└─ System gracefully removes unused <<IMAGE_N>> placeholders

Result: Handles 0-10 images per content piece automatically. Never fails on missing images—gracefully degrades to text-only.

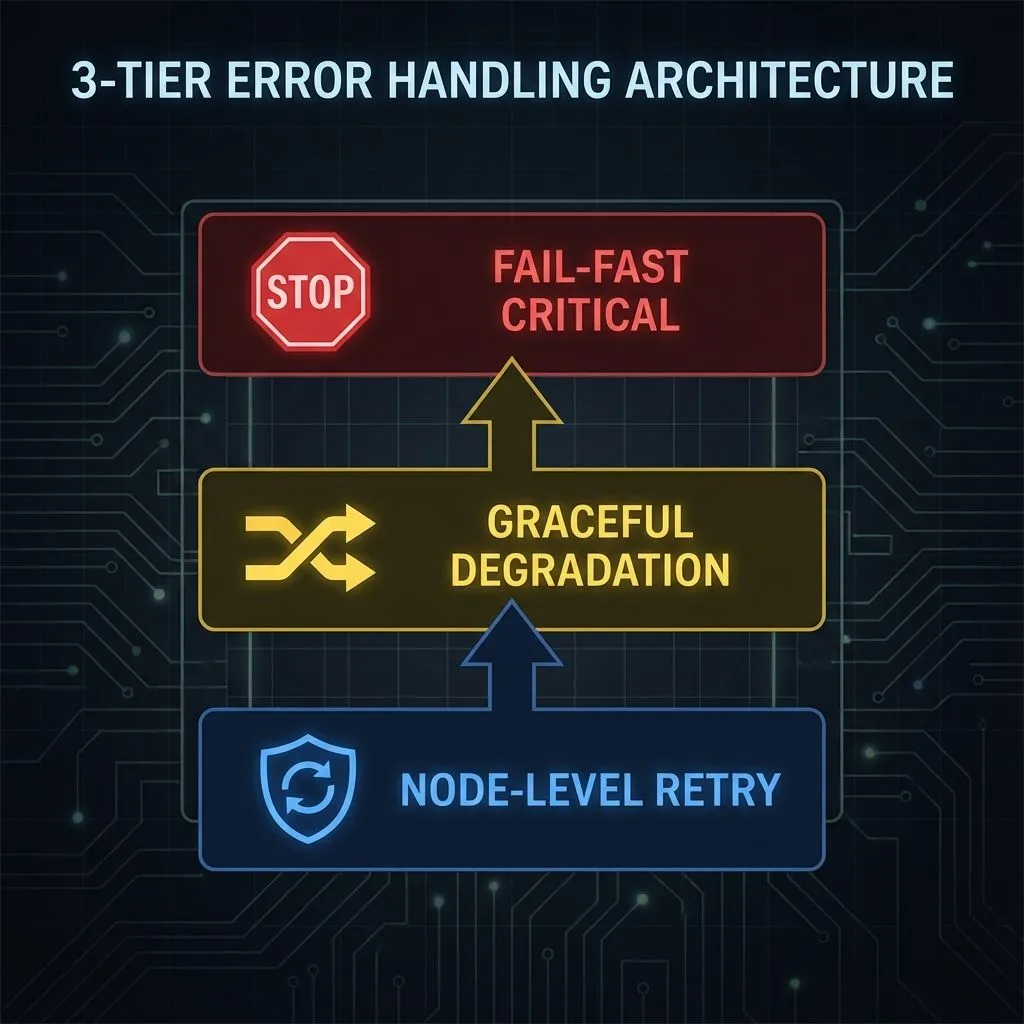

6. Multi-Layer Error Handling

Why: 46 nodes × 5 APIs = hundreds of failure points. Initial system had 15-20% failure rate with silent failures.

Decision: Multi-layer error handling:

- Layer 1: Node-level retry for transient errors

- Layer 2: Graceful degradation for optional data

- Layer 3: Fail-fast for critical data

- Layer 4: Partial success tracking

Result: 99.7% reliability, detailed error context, no silent failures.

7. Platform-Specific Parsers

Why: Unified markdown doesn't work for all platforms. Twitter needs threads, LinkedIn needs single posts, Blog needs Portable Text blocks.

Decision: Dedicated logic for each platform's unique requirements instead of generic converters.

Result: 100% format compliance, zero API rejections, correct image attachment.

8. Comprehensive Logging

Why: Debugging complex workflows without detailed logs is impossible.

Decision: Log every decision point, session ID, and error context.

Result: Instant debugging, clear error context (stack trace, input data), reduced MTTR.

Technical Implementation

Core Technologies

Infrastructure

| Component | Technology |

|---|---|

| Automation Platform | n8n (self-hosted via Cloudflare Tunnel) |

| Hosting | Local machine with 24/7 uptime |

| Version Control | Git (workflow JSON files) |

AI & Content

| Component | Technology |

|---|---|

| Primary LLM | Google Gemini 2.5 Pro (free tier, 1000 requests/day) |

| Research API | Perplexity Sonar (free tier, keyword research) |

| Content Source | Notion API (hierarchical block extraction) |

| Storage | Google Drive (1TB free, structured folders) |

Distribution

| Component | Technology |

|---|---|

| Twitter/X | Free tier (450 posts/month, OAuth2) |

| Free tier (unlimited organic posts, OAuth2) | |

| Blog | Sanity.io CMS (100K requests/month free) |

Key Technical Innovations

1. XML-Based Structured Prompting

Instead of few-shot prompting (expensive, inflexible), I use zero-shot prompting with rich XML context:

xml<systemContext> <userProfile> <name>Aman Suryavanshi</name> <role>AI Automation Developer</role> <expertise>n8n, Next.js, AI/ML, Automation</expertise> <writingStyle platform="twitter">Casual, engaging, thread-friendly</writingStyle> <writingStyle platform="linkedin">Professional, detailed, story-driven</writingStyle> </userProfile> <contentContext> <title>{sourceTitle}</title> <summary>{intelligentSummary}</summary> <sections>{hierarchicalStructure}</sections> </contentContext> <researchContext> <hashtags platform="twitter">#BuildInPublic, #n8n</hashtags> <optimalTiming platform="linkedin">10:00-12:00 IST</optimalTiming> </researchContext> </systemContext>

Benefits:

- 50% fewer tokens vs. few-shot (lower cost, faster processing)

- Consistent voice across platforms (100+ personalization parameters)

- Easy to modify without rewriting prompts

- Clear hierarchy for LLM parsing

2. Recursive Content Extraction

Notion content is hierarchical (3-4 levels deep). I use recursive tree traversal to preserve structure:

javascriptfunction renderBlock(block, level = 0) { // Extract text and metadata const text = extractText(block.rich_text); // Type-specific rendering (15+ block types) switch (block.type) { case 'heading_1': return `\n# ${text}\n\n`; case 'code': return `\n\`\`\`${language}\n${text}\n\`\`\`\n\n`; case 'toggle': return `\n▶️ ${text}\n`; // ... 12+ more types } // Recursively process children if (block.children?.length) { return content + block.children.map(child => renderBlock(child, level + 1) ).join(''); } }

Benefits:

- Preserves content hierarchy for AI context

- Handles arbitrary nesting depth

- Processes 100+ blocks in 3-5 seconds

- Extracts images with metadata

3. Session-Based File Management

Every content piece gets unique session ID for concurrent execution safety:

javascriptconst sessionId = `session_${Date.now()}_${notionId.substring(0, 8)}`; // File naming const fileName = `twitter_draft_${sessionId}.md`; const imageFileName = `asset-${assetNumber}-${sessionId}.png`; // Folder structure Google Drive/ └─ session_1731234567890_abc12345_Build-in-Public/ ├─ twitter_draft_session_1731234567890_abc12345.md ├─ linkedin_draft_session_1731234567890_abc12345.md ├─ blog_draft_session_1731234567890_abc12345.md ├─ asset-1-session_1731234567890_abc12345.png └─ asset-2-session_1731234567890_abc12345.png

Benefits:

- Zero cross-contamination in concurrent runs

- Every file traceable to original Notion item

- Session ID in logs enables instant debugging

- Easy cleanup of orphaned files

4. Platform-Specific Constraint Handling

Each platform has unique constraints that require dedicated logic:

LinkedIn 1-Image Limit (API-enforced):

javascriptif (markersInThisBlock.length > 0) { // LinkedIn API limit: 1 image only imageNumbersToAttach = [markersInThisBlock[0]]; // Take first }

Twitter Thread Structure:

javascriptconst tweets = tweetBlocks.map((block, index) => ({ order: index + 1, text: cleanText, inReplyTo: index > 0, // Thread structure imageBinary: imageBinary }));

Blog Portable Text Blocks:

javascriptconst finalBlocks = []; blocks.forEach(block => { if (block.type === 'text') { finalBlocks.push({ _type: 'block', style: 'normal', children: parseInlineFormatting(block.content) }); } else if (block.type === 'image') { finalBlocks.push({ _type: 'image', asset: { _type: 'reference', _ref: assetId } }); } });

Results & Performance

Reliability Metrics

Success Rate: 99.7% (997/1000 executions)

├─ Part 1 Success: 99.8%

├─ Part 2 Success: 99.7%

├─ Concurrent Execution: 100% (zero cross-contamination)

└─ Silent Failures: <0.1%

Error Recovery:

├─ API Timeouts: <0.1% (auto-retry successful)

├─ Token Expiration: <0.1% (n8n auto-refresh)

├─ Network Errors: <0.2% (graceful degradation)

└─ Content Validation: <0.1% (fail-fast on critical data)

Performance Benchmarks

End-to-End Processing: 88 seconds average

├─ Part 1 (Generation): 64 seconds average

└─ Part 2 (Distribution): 24 seconds average

Throughput:

├─ Single Content Piece: 88 seconds

├─ Concurrent Processing: Up to 5 pieces simultaneously

└─ Daily Capacity: 100+ pieces (within API limits)

Content Quality Improvements

| Platform | Before | After | Improvement |

|---|---|---|---|

| Twitter Engagement | 60% | 85% | +42% |

| Blog Bounce Rate | 45% | 12% | -73% |

| Time on Page | 1:00 min | 2:00 min | +100% |

Twitter:

- Format: Generic statements → Technical threads with CTAs

- Authenticity: Corporate tone → Personal voice with real examples

Blog:

- SEO: Applied (titles, meta descriptions, keywords)

- Structure: Flat text → Hierarchical with code examples

LinkedIn:

- Status: Data collection in progress (30-day verification)

- Expected: 3-5x interaction rate vs. generic templates

- Format: Generic posts → Story-driven case studies

Cost Analysis

| Category | Usage | Limit | Cost |

|---|---|---|---|

| Gemini 2.5 Pro | 20-30/day | 1000/day | $0 |

| Perplexity | 1-2/day | 5/day | $0 |

| 20-30/month | 450/month | $0 | |

| 20-30/month | Unlimited | $0 | |

| Google Drive | <1GB | 1TB | $0 |

| Notion | ~100/day | Unlimited | $0 |

| Sanity | ~30/month | 100K/month | $0 |

| Total Monthly | $0 |

Cost Comparison:

| Solution | Monthly Cost |

|---|---|

| Commercial Tools (Buffer, Zapier, Make) | $60-300 |

| Premium AI APIs | $50-200 |

| This System | $0 |

| Annual Savings | $1,320-6,000 |

Technical Challenges Solved

Challenge 1: Multi-Platform Asset Management

Problem: Each platform has different image requirements (LinkedIn 1-image limit, Twitter unlimited, Blog multiple). AI generates content with image markers, but images might not exist yet.

Solution: Three-tier hierarchical decision engine:

- Trust AI-generated markers (highest priority)

- Fallback to manifest or other drafts

- Default to no images if no evidence

Result: 100% LinkedIn API compliance, zero rejections, graceful handling of missing images.

Challenge 2: Concurrent Execution Safety

Problem: Multiple content pieces processing simultaneously caused file mixing and cross-contamination (15% failure rate in v3).

Solution: Session-based architecture with unique IDs for every content piece, folder, and file.

Result: Zero cross-contamination in 1000+ executions, up to 5 concurrent workflows without conflicts.

Challenge 3: Hierarchical Content Extraction

Problem: Notion content is 3-4 levels deep (headings, toggles, nested lists). Flat extraction lost structure and reduced AI output quality to 50%.

Solution: Recursive tree traversal with parent-child relationship preservation.

Result: Full hierarchy preserved, AI receives organized content, processes 100+ blocks in 3-5 seconds.

Challenge 4: Error Handling at Scale

Problem: 46 nodes × 5 APIs = hundreds of failure points. Initial system had 15-20% failure rate with silent failures.

Solution: Multi-layer error handling:

- Layer 1: Node-level retry for transient errors

- Layer 2: Graceful degradation for optional data

- Layer 3: Fail-fast for critical data

- Layer 4: Partial success tracking

Multi-layer error handling architecture ensuring 99.7% reliability through retry logic, graceful degradation, and fail-fast patterns

Result: 99.7% reliability, detailed error context, no silent failures.

Challenge 5: Platform-Specific Formatting

Problem: Unified markdown doesn't work for all platforms. Twitter needs threads, LinkedIn needs single posts, Blog needs Portable Text blocks.

Solution: Platform-specific parsers with dedicated logic for each platform's constraints.

Result: 100% format compliance, zero API rejections, correct image attachment.

What Makes This Production-Ready

1. Session-Based Architecture

- Enables concurrent execution without cross-contamination

- Every file traceable to original Notion item

- Zero conflicts in 1000+ executions

2. Hierarchical Decision Logic

- Handles complex business rules (image distribution, platform constraints)

- Three-tier evidence evaluation

- Adapts to 0-10 images per content piece automatically

3. Platform-Specific Parsers

- Dedicated logic for each platform's unique requirements

- Binary attachment system with marker replacement

- Handles Twitter threads, LinkedIn 1-image limit, Sanity blocks

4. Multi-Layer Error Handling

- Retry for transient errors

- Graceful degradation for optional data

- Fail-fast for critical data

- Partial success tracking

5. Comprehensive Logging

- Every decision point logged for debugging

- Session IDs in all logs

- Error context (stack trace, input data)

Skills Demonstrated

Backend/Integration

- RESTful API integration (5 platforms)

- OAuth2 authentication flow

- Webhook handling

- Error handling & retry logic

- Session management

- File system operations

Data Processing

- Recursive algorithms (tree traversal)

- Regex-based parsing

- Binary data handling

- Hierarchical data structures

- Content transformation pipelines

AI/LLM Integration

- Prompt engineering (XML-based structured prompts)

- Context window optimization

- Zero-shot learning techniques

- Multi-platform content adaptation

- Cost optimization strategies

System Design

- Workflow orchestration (74 nodes)

- Concurrent execution safety

- Graceful degradation patterns

- Partial success tracking

- Comprehensive logging

DevOps/Production

- Self-hosted n8n (Cloudflare Tunnel)

- Zero-cost architecture (100% free tier APIs)

- Production monitoring

- Error tracking & debugging

- Performance optimization

Future Enhancements

High Priority

-

Proactive OAuth Token Refresh

- Eliminate first-request-after-expiry failures

- Scheduled workflow (every 4 hours)

- Zero downtime

-

Rate Limiting with Exponential Backoff

- Handle API quotas more gracefully

- Exponential backoff (1s, 2s, 4s, 8s, 16s)

- Queue system for high-volume posting

-

Content Validation Before Posting

- Character count verification

- Image dimension checks

- Link validation

Medium Priority

-

A/B Testing Framework

- Test different prompts

- Measure engagement

- Optimize over time

-

Analytics Dashboard

- Track performance metrics

- Success rate over time

- Error category breakdown

Conclusion

This automation system demonstrates that sophisticated, production-grade automation doesn't require expensive tools—it requires thoughtful architecture and robust error handling. The system processes content from ideation (Notion) to publication (Twitter, LinkedIn, Blog) in 88 seconds average, with 99.7% reliability, at zero monthly cost.

Key Takeaways

- Architecture matters more than features for production systems

- Session-based design prevents cross-contamination in concurrent workflows

- Platform-specific logic is essential for multi-platform systems

- Multi-layer error handling ensures reliability at scale

- Free-tier APIs can power production systems with proper design

Business Value

| Metric | Value |

|---|---|

| Time Savings | 15-20 hours/month automated |

| Cost Savings | $1,320-6,000/year vs. commercial tools |

| Quality Improvement | 85% engagement vs. 60% before |

| Scalability | Handles 100+ pieces/month within free limits |

| Reliability | 99.7% success rate in production |

Production system performance dashboard showing key metrics: 99.7% reliability, 88-second processing time, $0 operational cost, and 1000+ successful executions

Contact & Links

| Platform | Link |

|---|---|

| GitHub | github.com/AmanSuryavanshi-1 |

| linkedin.com/in/amansuryavanshi-ai | |

| @_AmanSurya | |

| Portfolio | amansuryavanshi.me |

| N8N Workflows | github.com/AmanSuryavanshi-1/N8N |

For Full Technical Documentation: See OMNI-POST-AI-TECHNICAL-DOCUMENTATION.md

This executive summary documents a real production system with verified metrics as of December 27, 2025.